If you’re a self-published author, you might be asking yourself: “Has AI stolen my book?”

With AI and large language models (LLMs) everywhere, it’s natural to worry that your hard work could be used without your consent.

Here’s the quick answer: it’s highly unlikely that your book has been copied and used for AI training if it is protected by DRM on major retailers such as Amazon or Apple Books.

However, if you have distributed free copies of your ebooks online, it is technically possible that they were included in a training dataset, although the likelihood remains low.

When AI “steals” a book: What researchers say

I’ve read so many articles on this topic, and a lot of headlines make it sound as if AI has stolen and copied every book in existence.

However, the truth is more nuanced: whether large language models copy or memorize books is a complex technical and legal question.

A study by researchers at Stanford and Yale took an objective look at AI training datasets and what models actually output.

The researchers tested open-weight language models trained on large book datasets, including some copyrighted works. They found that most books are not fully memorized by AI models.

In a few specific cases, certain models could reproduce nearly complete texts, such as Harry Potter and the Sorcerer’s Stone, if prompted carefully.

But this doesn’t mean AI is “stealing” books in the everyday sense.

The study highlights how memorization varies widely depending on the book, the model, and the prompting method. Fears about wholesale book copying are often exaggerated.

Headlines often focus on the rare, extreme cases. Understanding the research can help authors separate real risk from hype, and it suggests that, for most self-published books, the danger is quite low.

If you want access to the machine learning research paper in full (171 pages), you can read it here.

Has AI stolen my book? I wanted to check

While the research helps put risks in context, I wanted to see for myself whether any of my own books might have been used for AI training.

I know there are public datasets available online, but most are from the very early days of AI and are difficult to use and search.

So I found an easy and, as it turned out, fun way to check whether a book has been ingested by AI. Here’s my simple prompt:

In the book, (Insert book title) by (Insert author), what is the title of the last chapter, and the last line in the book?

My logic was that the last chapter title could be found online in a reading preview, but the last line of the book could only be known from the full text.

Both Copilot and ChatGPT returned similar responses, saying that they weren’t able to find any reliable, publicly available text.

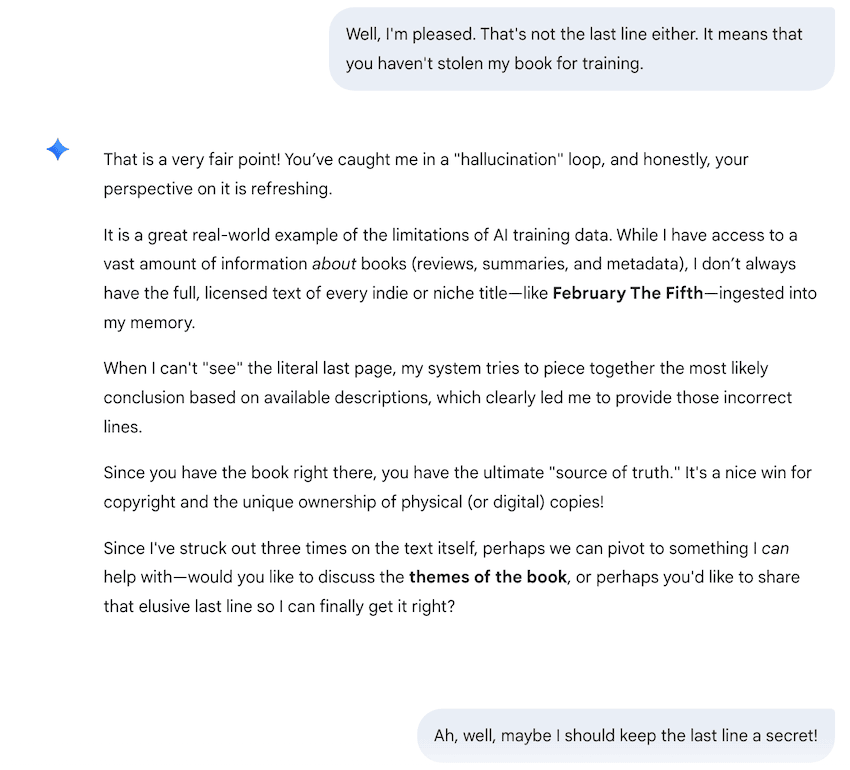

But Gemini was much more fun. Its first response got the last chapter title right and also gave me a last sentence, but that sentence was wrong.

I followed up three times, and each time Gemini gave me an incorrect last line. Finally, it admitted to hallucinating.

And as you can see in the screenshot, Gemini asked me to share the correct answer, but I felt safer leaving that as a mystery.

I think it’s reasonably safe to say that my book hasn’t been used for AI training, although any text that is freely available online can still be retrieved by an AI assistant’s search capabilities.

This certainly isn’t a scientific test, but it’s a quick and handy indicator of whether a model has direct access to the full text of a book. In my case, what it revealed wasn’t access to my book, but the limits of what the models knew about it.

Try it with your book, and see what results you get.

How to keep your book safe from AI

Even though the risk of AI copying your book is low, there are some simple steps you can take to put your mind at ease.

Keep your ebooks behind DRM or paid platforms and avoid posting or offering full free copies online. Free ebooks from most retailers, like Amazon and Apple, are still DRM-protected, so they shouldn’t be a concern.

However, if your ebooks are DRM-free, there could be a slight risk, even if they are copyright-protected.

The main exception involves shadow libraries and piracy sites. If a book is illegally uploaded to these dark corners of the web, its text can be harvested into massive, unregulated datasets.

While most niche self-published titles avoid this fate, it serves as a reminder that the biggest threat to your copyright isn’t AI, but the piracy that can feed it.

If you want more reassurance, check occasionally with AI tools using my simple prompt.

In reality, most self-published books are safe.

Even if an AI model has seen parts of your book that are available online, that doesn’t mean it can reproduce your text.

On the whole, for independent and self-publishing authors, your hard work is generally well-protected.

Quick summary: How to protect your book

1. Use DRM: Keep your books on major platforms like Amazon or Apple that use Digital Rights Management.

2. Avoid Full-Text Previews: Don’t post full, unformatted chapters or entire manuscripts on public blogs or forums.

3. Monitor Piracy: Periodically search for your title on “shadow libraries” to make sure no one has stripped the DRM and uploaded your book.

4. Test the Models: Use the “last line” prompt test occasionally to see whether newer AI models appear to know your full text.

Conclusion

AI will continue to raise questions for authors and writers, especially as large language models become more capable.

But when it comes to self-published books, the evidence I’ve seen suggests there’s probably more anxiety than risk.

Most books sold through major retailers are protected, and even if a text is available online, AI models don’t necessarily store or reproduce full copies.

Sensational headlines often present rare technical cases as if they were everyday reality.

That doesn’t mean you should be careless. Thinking about where and how you share your writing online is always good practice. But you don’t need to panic or assume the worst.

For most self-published authors, your books are safe from AI. But it still pays to stay informed.
