Every day, I seem to spend more and more time monitoring and blocking spam and bad bot traffic.

It doesn’t matter if your blog or website is big or small; bots are hitting it all the time.

When I check a couple of my smaller sites that receive very few visitors per day, there is always a steady stream of automated bot traffic.

But to give you an idea of how much web traffic is automated, malicious, or spam, I completed a full audit of Just Publishing Advice. Here are the results.

Monitoring spam and bad bot traffic

You probably check your traffic numbers with Google Analytics (GA).

It’s one of the best free tools for gauging the performance of your blog or website.

There’s nothing better than to see a steady increase in the number of users and page views.

But it doesn’t tell you how many automated, suspicious, or malicious visits your site receives.

If you want to discover the traffic that GA ignores or misses, you need to dig deeper with other data sources.

I use a handful of tools to monitor and protect my site from suspicious bot traffic. Luckily, most of them are free.

The only paid service I use is Statcounter, which costs me $9.00 per month.

It collects similar data to GA, but the significant advantage is that it reports IP addresses and outbound link activity.

Because of this, I can monitor and manage scrapers and automated bot hits and check for invalid AdSense ad clicks.

Now, on to the data to show you what I discovered.

Spam and bot traffic activity in detail

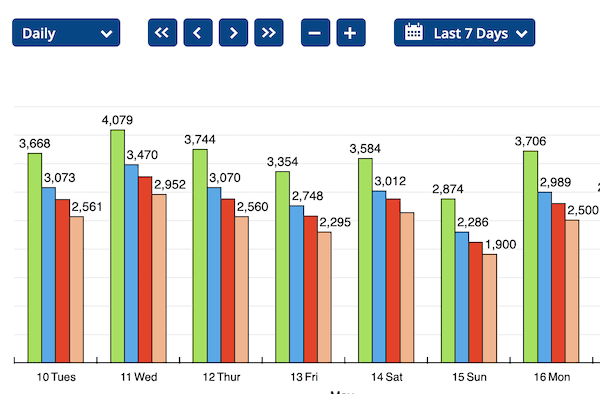

On average, my site receives around 3,500 human user visits per day.

I would always like to have more, but it’s not too bad.

But this is not the full picture.

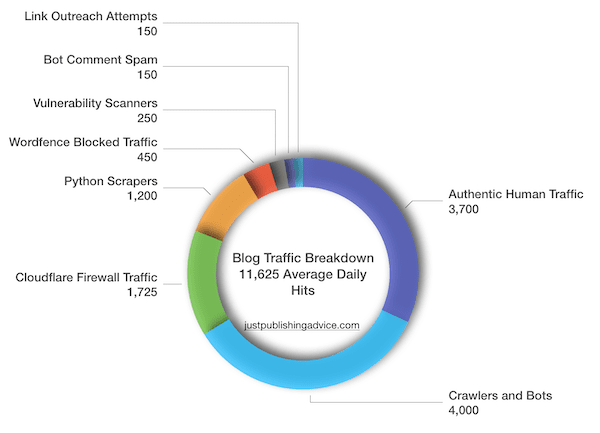

When I check and collect access data from other sources, the actual number of hits on my site is around 11,500 per day.

As you can see, a lot more is happening on my site than most analytics tools report.

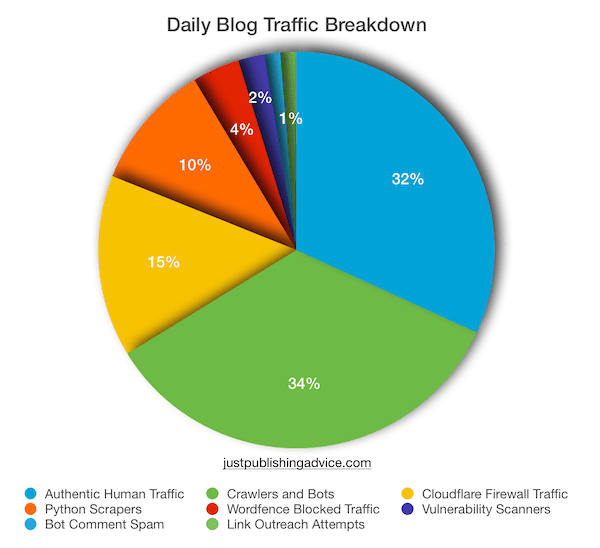

A better way to look at this data is by using percentages.

Here’s a percentage breakdown of my average daily site traffic.

Of all the visits to my site each day, only 32% is real visitor traffic.

However, this number seems to be about average.

Help Net Security reported in 2021 that automated traffic makes up 64% of internet traffic.

Bot traffic hits every site, so it’s a fact of life.

But it’s still worthwhile checking your site traffic from time to time.
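The percentage split above is simple arithmetic. Here is a minimal sketch using the rounded daily figures quoted earlier (3,500 human visits out of 11,500 total hits). The function name is only for illustration. The rounded figures give roughly 30%, which is close to the 32% in the chart; the small gap presumably comes from the chart using exact daily counts.

```python
def traffic_shares(human_visits: int, total_hits: int) -> dict[str, float]:
    """Return the human and automated share of all hits, as percentages."""
    if total_hits <= 0:
        raise ValueError("total_hits must be positive")
    if not 0 <= human_visits <= total_hits:
        raise ValueError("human_visits must be between 0 and total_hits")
    human = 100 * human_visits / total_hits
    return {"human": round(human, 1), "automated": round(100 - human, 1)}


# Rounded daily averages quoted in this article.
shares = traffic_shares(3_500, 11_500)
print(shares)
```

You can plug in your own numbers: the human figure from your analytics tool, and the total from your firewall and server logs.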

How to access spam and bot traffic data for your site

As I mentioned before, I use mostly free tools.

These form my lines of defense against bots and spam.

1. Cloudflare

You might think Cloudflare is only a CDN to make your site load faster.

But that’s only a side benefit of a free account. The real advantage of using Cloudflare is security.

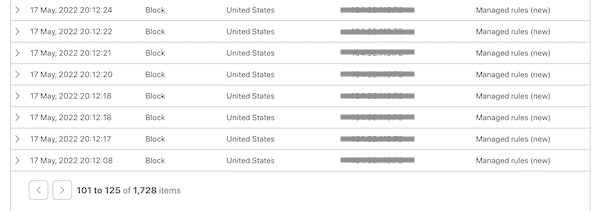

Its web application firewall (WAF) is my first line of defense.

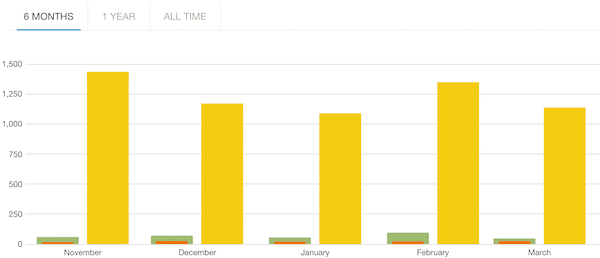

I have masked the IP addresses for privacy. However, you can see that the total number of blocks and challenges issued for this one day is 1,728.

With the WAF, you can set firewall rules or use the tools to block or challenge IP addresses or ASNs.

It’s by far the best tool to manage unwelcome traffic on your site.

2. Wordfence

My second line of defense is the Wordfence plugin on my site.

It blocks any malicious traffic that might get past Cloudflare.

The number of blocks varies from day to day, but on average, it blocks between 250 and 450 attempts every day.

3. Server protection

The last line of defense is the Apache server at my hosting provider.

From the access and error logs, I can scan for any untoward activity the server has blocked and check if any allowed activity looks suspicious.

Then, I can use Cloudflare or Wordfence to look after any suspicious activity I discover.
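If you are comfortable with a little scripting, the log check can be partly automated. The sketch below assumes your server writes the common Apache "combined" log format. The sample lines use made-up documentation IP addresses, and the function names are mine, not part of any tool mentioned here. It counts responses by status code and lists the IP addresses the server refused most often.

```python
import re
from collections import Counter

# Assumption: Apache "combined" log format.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) \S+ '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_line(line):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    return match.groupdict() if match else None


def summarize(lines, top=5):
    """Count hits per status code and the IPs most often answered with 401/403."""
    by_status = Counter()
    denied_by_ip = Counter()
    for line in lines:
        entry = parse_line(line)
        if entry is None:
            continue  # skip lines in an unexpected format
        by_status[entry["status"]] += 1
        if entry["status"] in {"401", "403"}:
            denied_by_ip[entry["ip"]] += 1
    return by_status, denied_by_ip.most_common(top)


# Made-up sample lines for illustration only.
sample_log = [
    '203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET /xmlrpc.php HTTP/1.1" 403 199 "-" "python-requests/2.31.0"',
    '203.0.113.5 - - [10/Oct/2023:13:55:37 +0000] "GET /wp-login.php HTTP/1.1" 403 199 "-" "python-requests/2.31.0"',
    '198.51.100.7 - - [10/Oct/2023:13:56:01 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
]
status_counts, most_denied = summarize(sample_log)
print(status_counts, most_denied)
```

To run it on a real log, pass an open file instead of the sample list, for example `summarize(open("access.log"))`, where the file name depends on your hosting setup.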

Catching spammers

Spammers are more of a nuisance than a threat.

But there are relatively easy ways to manage them.

WordPress comment spam plugin

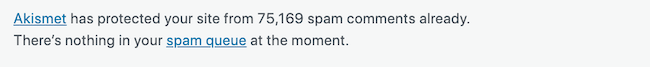

Akismet is a free plugin that works quite well to combat comment spam on your blog.

The accuracy rate is around 99.5%, so it works very well.

There are about 4,000 legitimate comments on my site. But Akismet has blocked over 75,000 spam comments!

If you get a lot of spam, the only drawback is that you have to keep deleting spam comments caught by Akismet.

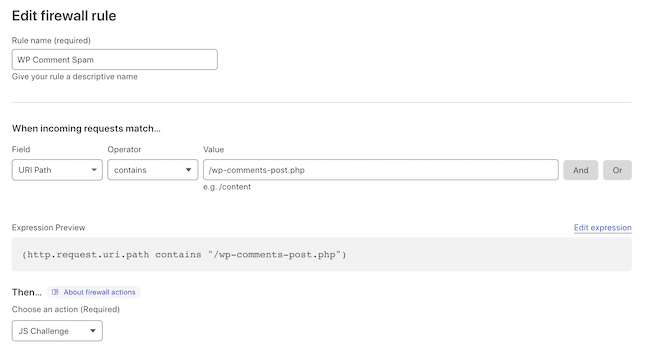

Cloudflare firewall rule to stop comment spam

The more traffic you get to your site, the more spam comments you will get.

In this case, you can take a stronger approach to the problem with a simple Cloudflare firewall rule that will block comment spammers from your site.

The upside to this rule is that it is highly effective against comment spam. The only slight downside is that it adds a little bit of friction for genuine commenters.

They will see a quick 2-5 second Cloudflare notice saying “Checking your browser” before they can post a comment.

Most people are familiar with this, so it’s not a big issue.

But because spammers don’t use a regular browser to inject comments, the firewall rule will block them.

To use this method, add the following rule to your Cloudflare firewall.

Rule name: You can choose any name to identify your rule.

Field: URI Path

Operator: Contains

Value: /wp-comments-post.php

Action: JS Challenge

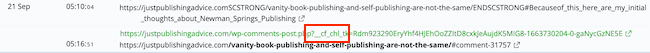

After activating the rule, you can check how well it works.

If you hover over the percentage, you will see how many challenges were solved.

The solved number is usually for genuine comments that passed the JS challenge. You can check this in your site’s logs.

Here is the log of a genuine comment that passed and successfully entered my moderation queue.

The red rectangle highlights the successful Cloudflare check.

It’s not a rule that most sites need, but it’s highly effective if your site is being hit by a lot of comment spam.

As you can see, I have had to delete over 75,000 spam comments over time.

But with this rule, hardly any get through now.

The one thing to note is that with this rule, you might see up to four hits blocked by Cloudflare for each failed spam comment attempt.

This is normal because Cloudflare is blocking the actions of the script that the spammer is using.

However, you will see one entry in your firewall for a genuine comment because the user has passed the JS challenge.

So don’t panic if you see the rule blocking 300-400 attempts per day.

You might still get the occasional spam comment if a spammer posts manually. But Akismet will usually catch it.

If you have had enough of comment spammers, this firewall rule will do the job for you.
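Before adding the rule, it can help to see how many comment submissions actually reach your server. The following is a rough sketch that counts POST requests to the comment handler per day in an Apache-style access log. It assumes the standard WordPress comment endpoint, wp-comments-post.php, and a log format where the request is the first quoted field. The function name and the sample lines are made up for illustration.

```python
from collections import Counter

# Assumption: the standard WordPress comment handler.
COMMENT_ENDPOINT = "/wp-comments-post.php"


def comment_posts_per_day(lines, endpoint=COMMENT_ENDPOINT):
    """Count POST requests to the comment endpoint, grouped by log date."""
    per_day = Counter()
    for line in lines:
        parts = line.split('"')
        if len(parts) < 3:
            continue  # no quoted request field on this line
        request = parts[1].split()
        if len(request) < 2:
            continue
        method, path = request[0], request[1]
        if method != "POST" or endpoint not in path:
            continue
        start = parts[0].find("[")
        if start == -1:
            continue  # no timestamp found
        # Timestamp looks like [10/Oct/2023:13:55:36 +0000]; keep the date part.
        day = parts[0][start + 1:].split(":", 1)[0]
        per_day[day] += 1
    return per_day


# Made-up sample lines for illustration only.
comment_log = [
    '203.0.113.9 - - [10/Oct/2023:01:02:03 +0000] "POST /wp-comments-post.php HTTP/1.1" 302 0 "-" "Mozilla/5.0"',
    '203.0.113.9 - - [10/Oct/2023:01:02:09 +0000] "POST /wp-comments-post.php HTTP/1.1" 302 0 "-" "Mozilla/5.0"',
    '198.51.100.7 - - [11/Oct/2023:08:00:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
]
daily_posts = comment_posts_per_day(comment_log)
print(daily_posts)
```

If the daily count is far higher than the number of genuine comments you receive, the firewall rule is probably worth the small amount of friction.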

SEO spam emails

Link outreach campaigns are now nothing more than spam.

In years gone by, asking for backlinks was considered a legitimate practice.

But now, SEO tools like Semrush make it easy to automate these campaigns and send them directly to your email address.

There’s nothing you can do other than delete these emails as they arrive.

In my case, it can be 100-200 per day, asking for links, guest posts, or sponsored post placement.

At that volume, I consider them nothing more than pure spam.

What can you do about scrapers?

Web scraping with Python and other tools is becoming more and more common.

It isn’t easy to know what to do about it.

Recently, LinkedIn tried to stop web scrapers, but a US appeals court ruled that scraping publicly accessible data was not illegal under federal computer fraud law.

It’s relatively easy to find scrapers that are accessing your site. You can search your server access logs for user agents such as python-requests or Python/3.

You can also set up a temporary Cloudflare firewall rule and issue a JavaScript challenge, using an expression such as: (http.user_agent contains "python-requests") or (http.user_agent contains "Python/3")

But there is little you can do other than monitor it. The only time you really need to challenge or block a scraper is when it is hitting your site too often.

I had one that was hitting my site over 14,000 times per day from over 50 different IP addresses.
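To spot a scraper like that one, you can group log entries by user agent and count both the hits and the number of distinct IP addresses. This is a minimal sketch, assuming an Apache-style log where the IP address is the first field and the user agent is the last quoted field. The marker strings come from the user agents mentioned above; the function names and sample lines are hypothetical.

```python
from collections import defaultdict

# Matched case-insensitively against the user agent string.
SCRAPER_MARKERS = ("python-requests", "python/3")


def scraper_activity(lines, markers=SCRAPER_MARKERS):
    """Return {user_agent: (hits, distinct_ip_count)} for matching user agents."""
    stats = defaultdict(lambda: {"hits": 0, "ips": set()})
    for line in lines:
        parts = line.strip().rsplit('"', 2)
        if len(parts) != 3:
            continue  # line does not end with a quoted user agent
        agent = parts[1]
        if not any(marker in agent.lower() for marker in markers):
            continue
        ip = line.split(None, 1)[0]
        stats[agent]["hits"] += 1
        stats[agent]["ips"].add(ip)
    return {agent: (s["hits"], len(s["ips"])) for agent, s in stats.items()}


def heavy_hitters(activity, min_hits):
    """Keep only the user agents with at least min_hits requests."""
    return {agent: v for agent, v in activity.items() if v[0] >= min_hits}


# Made-up sample lines for illustration only.
scraper_log = [
    '203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET /a/ HTTP/1.1" 200 100 "-" "python-requests/2.31.0"',
    '203.0.113.6 - - [10/Oct/2023:13:55:37 +0000] "GET /b/ HTTP/1.1" 200 100 "-" "python-requests/2.31.0"',
    '203.0.113.5 - - [10/Oct/2023:13:55:38 +0000] "GET /c/ HTTP/1.1" 200 100 "-" "python-requests/2.31.0"',
    '198.51.100.7 - - [10/Oct/2023:13:56:01 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
]
activity = scraper_activity(scraper_log)
print(heavy_hitters(activity, min_hits=3))
```

The threshold is a judgment call. A few dozen hits a day is probably harmless, while thousands of hits spread across many IP addresses is a good candidate for a challenge or a block.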

There are legitimate reasons for scraping, such as SEO research or data gathering. But there are also content scrapers that copy, steal, and republish your content.

But it’s not that easy to tell the difference.

Artificial intelligence bots

The new challenge is AI (artificial intelligence) data-gathering bots.

There is no law or rule governing content gathering and scraping by AI companies.

But it’s almost guaranteed that AI bots have harvested data and content from your site.

Unlike search engine bots, many AI bots do not clearly identify themselves, so tracking or blocking them is very difficult. Many also do not respect robots.txt directives.

Until there is progress on this front, there is no reliable way to identify, block, or prevent AI bots from crawling your site.

Vulnerability scanners

This is another form of bot traffic that is mostly good but sometimes bad.

Web security companies naturally and helpfully scan for software, plugin, and theme vulnerabilities that can be patched and fixed.

But then there are hackers that are looking for the same vulnerabilities to access and control websites.

Again, it’s not easy to tell the good guys from the bad guys.

In most cases, the best approach is to let Cloudflare and Wordfence manage the issue. However, there are times when I have to add a manual block just to be sure.

Good bots and bad bots

Search engines like Google and Bing use bots to check your site. Without these, your site would never stand a chance of being indexed, and your pages would never rank in search.

You want your site and blog posts to rank on Google and Bing, so yes, these are really good bots.

Other good bots help you analyze your traffic. These might include Ahrefs, Semrush, and Ubersuggest, among others.

But yes, there are also bad bots, such as those run by hackers and spammers, who don’t have your best interests in mind.

Learning how to tell the difference is not always easy. But excessively blocking bots will often do you more harm than good.

Again, all you can do is monitor, check, and then be selective about which ones you block or challenge.

I use a couple of free online tools to help me check.

One is AbuseIPDB. You can check any IP address to see if it has been reported as abusive.

Another is Scamalytics. With this app, you can check the fraud score of an IP address.
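I check IP addresses by hand on these sites, but AbuseIPDB also offers an API if you would rather script the lookup. The sketch below is based on my understanding of its v2 "check" endpoint, so confirm the details against the current AbuseIPDB documentation before relying on it. You need your own API key, and the function names here are only for illustration.

```python
import json
import urllib.parse
import urllib.request

# Assumption: AbuseIPDB API v2 "check" endpoint; verify against their docs.
ABUSEIPDB_CHECK_URL = "https://api.abuseipdb.com/api/v2/check"


def build_check_request(ip, api_key, max_age_days=90):
    """Build (but do not send) a lookup request for one IP address."""
    query = urllib.parse.urlencode({"ipAddress": ip, "maxAgeInDays": max_age_days})
    return urllib.request.Request(
        f"{ABUSEIPDB_CHECK_URL}?{query}",
        headers={"Key": api_key, "Accept": "application/json"},
    )


def abuse_score(response_body):
    """Extract the abuse confidence score (0-100) from a JSON response body."""
    return json.loads(response_body)["data"]["abuseConfidenceScore"]


def check_ip(ip, api_key):
    """Send the request and return the score. Needs network access and a valid key."""
    request = build_check_request(ip, api_key)
    with urllib.request.urlopen(request, timeout=10) as response:
        return abuse_score(response.read().decode("utf-8"))
```

A call such as `check_ip("203.0.113.5", "YOUR_API_KEY")` would return the score for that address. A high score suggests the address has been widely reported, but it is still worth checking what the address actually did on your site before blocking it.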

Conclusion

You cannot stop spam and bad bot traffic on your website or blog.

All you can do is monitor it and try to manage it as best you can.

But don’t be surprised if you discover that around 65% of your site traffic is automated bots.

The latest report from Imperva confirms that bad bot activity is increasing every year.

All site owners can do, and should do, is learn how to manage the threats as effectively as possible.

Related reading: Cloudflare Cache Everything Improves WordPress TTFB By 90%

A great post, and it will be useful for a lot of people, but for non-techy people like me, it’s just too difficult.

What are scrapers, for example? How do you use JavaScript to temporarily block stuff?

I wanted to add, I think it was a MailChimp link, onto my site and it told me to add it to a part of the software. I had no idea how to do it so I didn’t bother.

There seems to be some idea that if you have a website, you understand these things.

Scrapers don’t visit and read a page on your site like normal visitors. They aim to download data and code from your site.

It could be for names, addresses, or locations. But other scrapers are after your text content to copy and republish.

As for JavaScript blocking, you need to use a service or program like Wordfence or Cloudflare.

I agree that you need a bit of technical know-how.

But once you set it up, or get someone to do it for you, it’s relatively easy to protect your site.